This update outlines recent work I've done building an interpreter for an embedded target. This was hinted at in previous posts on this page, and is now running in a practical application on a 16-bit processor. We normally think of interpreters (refer to Wikipedia articles on the Interpreter Pattern, and Interpreters in Computing) as carrying out three tasks: deserialization, interpretation, and serialization. Deserialization is the process of parsing your source code in serial form, either as a disk file or as a stream of characters typed at a command prompt, and converting it into a representation of your program in the computer's memory as a composite structure of tokens. Interpretation is the process of traversing the program in memory and taking action based on the tokens found, typically performing computations and interacting with peripherals. Serialization is the process of traversing the program in memory and expressing it as a source file on disk, or as a stream of characters on the console. In principle serialization and deserialization are the inverse of each other, which can be verified with a round-trip comparison test.

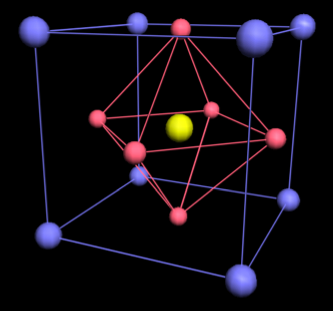

Many variations on this theme are possible, some of which are downright surprising. See for example Pascal Script, which is used in Inno Setup and other programs. This work however is focused on the Abstract Syntax Tree (AST) approach. An obvious way to obtain a running program would be to type a file of source code, write a parser to deserialize it into an AST, and then write an interpreter to traverse the tree. What I've done is a bit less obvious. I've statically instantiated enough tokens, including both composite and terminal nodes, to represent the AST. Then I compose them into an AST during initialization by setting pointers in linked lists owned by the composite nodes. Each composite node can have a list, and except for the root node must also be on a list. Each terminal node must be on a list, but cannot have a list. The program is run by asking the root node to express itself. This means having the root node run its entry code, ask all subordinate nodes to express themselves recursively, and then run its exit code. At this point all nodes in the AST will have been traversed and the program is finished.
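The composition and traversal described above can be sketched in a few lines. This is only an illustration in Python, not the EC++ code running on the target: the class names `Node` and `Composite`, the `express` method, and the node names are all assumptions, and ordinary Python lists stand in for the linked lists owned by the composite nodes.

```python
class Node:
    """A terminal node: it sits on a parent's list but owns no list itself."""

    def __init__(self, name):
        self.name = name
        self.parent = None

    def on_entry(self, trace):
        trace.append(f"enter {self.name}")

    def on_exit(self, trace):
        trace.append(f"exit {self.name}")

    def express(self, trace):
        # A terminal node just runs its entry code, then its exit code.
        self.on_entry(trace)
        self.on_exit(trace)


class Composite(Node):
    """A composite node: it owns a list of subordinate nodes."""

    def __init__(self, name):
        super().__init__(name)
        self.children = []

    def add(self, child):
        child.parent = self
        self.children.append(child)
        return child

    def express(self, trace):
        # Entry code, then every subordinate node recursively, then exit code.
        self.on_entry(trace)
        for child in self.children:
            child.express(trace)
        self.on_exit(trace)


# Instantiate the nodes first, then compose them during "initialization".
root = Composite("root")
loop = Composite("loop")
read_a = Node("read_a")
read_b = Node("read_b")
root.add(loop)
loop.add(read_a)
loop.add(read_b)

# Running the program means asking the root node to express itself.
trace = []
root.express(trace)
```

After `root.express(trace)` returns, `trace` holds the entry and exit events of every node in visiting order, which is a convenient way to check that the whole tree was traversed.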

Notice that we don't yet have a text representation of the program we just ran. In fact, we don't even need a serializer or deserializer for the case where the program is fixed and never changes. Notice also that the AST really is abstract, in that it can run on any combination of hardware and software that can represent and traverse the tree. The interpreter described here was coded in EC++ to run on a 16-bit microcontroller. I've added a serializer for convenience, which expresses the AST as JSON text. JSON maps well to the internal representation of the AST in memory, but you could certainly use XML or some other language of your choosing. You can even create a new language from scratch that meets your needs. The serialized text gives a record of the program implemented in the AST, and also allows one to capture the complete state of the program for comparison before and after execution. When the need arises to send new programs to the target at runtime, just implement a deserializer that can parse the JSON text and compose a matching AST. Details of the AST are based on a previous article posted on this web site.
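A minimal sketch of the serializer, the deserializer, and the round-trip comparison follows, assuming a simplified node with only a name and an optional child list. The key names `name` and `children` are hypothetical and are not taken from the JSON produced on the target.

```python
import json


class Node:
    def __init__(self, name, children=None):
        self.name = name
        self.children = children  # None marks a terminal node


def to_dict(node):
    """Nodes become dictionaries; lists owned by composites become arrays."""
    d = {"name": node.name}
    if node.children is not None:
        d["children"] = [to_dict(child) for child in node.children]
    return d


def from_dict(d):
    """Compose a matching tree from a parsed dictionary."""
    kids = d.get("children")
    if kids is None:
        return Node(d["name"])
    return Node(d["name"], [from_dict(k) for k in kids])


def serialize(node):
    return json.dumps(to_dict(node), indent=2, sort_keys=True)


def deserialize(text):
    return from_dict(json.loads(text))


program = Node("root", [Node("setup"), Node("loop", [Node("measure")])])
text = serialize(program)

# Round trip: serializing the deserialized text should reproduce the text.
round_trip = serialize(deserialize(text))
```

Comparing `text` with `round_trip` is the round-trip test mentioned earlier: if the two strings differ, the serializer and deserializer are not inverses of each other.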

So far, I've been writing about an interpreter that starts at the root node and traverses the AST recursively in one direction until every node has been visited. But consider a generalization where the interpreter can navigate from any node to any other node by following the links in either direction. This then implements a hierarchical state machine where the instantaneous state is uniquely represented by the node that currently has control. You navigate from one state to another by traversing the tree along a path which includes both the start and end points of the desired transition. Any node in the tree can be reached from any other, so long as you allow the entry and exit code of each node along the path to run as needed. The path is found by stepping up the hierarchy until the target node can be "seen" below, then stepping back down until the target is reached. A common idiom in this technique is to place a parent node which enables and configures the hardware needed for a particular type of measurement on entry. Child nodes are then placed beneath which carry out the desired set of measurements. After measurements are complete, the parent node returns the hardware to its idle state on exit. Measured data can be appended to the currently active node in memory while it is being taken. Post analysis and report generation are then done while traversing the tree again after measurements are complete.
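The path-finding rule (step up until the target can be seen below, then step back down) can be sketched as follows. This is an assumption-laden illustration: the `State` class, the `transition` function, and the state names are hypothetical, and the entry and exit code is reduced to appending a message to a log.

```python
class State:
    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent


def ancestors(node):
    """Return the chain [node, parent, ..., root]."""
    chain = []
    while node is not None:
        chain.append(node)
        node = node.parent
    return chain


def transition(source, target, log):
    """Move control from source to target, running exit and entry code."""
    down = ancestors(target)
    visible = {id(n) for n in down}
    common = None
    # Step up from the source, exiting each node, until we reach a node
    # from which the target can be "seen" below (a shared ancestor).
    for node in ancestors(source):
        if id(node) in visible:
            common = node
            break
        log.append(f"exit {node.name}")
    if common is None:
        raise ValueError("source and target are not in the same tree")
    # Step back down from just below the shared ancestor to the target.
    for node in reversed(down[:down.index(common)]):
        log.append(f"enter {node.name}")
    return target


# Two parent nodes that would configure hardware, each with child states.
root = State("root")
volts = State("volts", root)
ch1 = State("ch1", volts)
ch2 = State("ch2", volts)
temp = State("temp", root)
probe = State("probe", temp)

sibling_log = []
transition(ch1, ch2, sibling_log)    # stays beneath the "volts" parent

cross_log = []
transition(ch1, probe, cross_log)    # leaves "volts", enters "temp"
```

Moving between siblings never runs the parent's exit code, so in this sketch the hardware configured by `volts` would stay enabled across `ch1` and `ch2` and be returned to idle only when control leaves that branch.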

Here is a fragment of JSON text illustrating the concept. This represents a loop that measures data on three channels, repeating ten times, with an interval of 60 seconds between iterations:


Nodes are represented as dictionaries, while lists owned by the composite nodes are represented as arrays. All nodes shown in this fragment are in fact composite, showing the AST before any measurements have been done. After measurements are complete, each measurement node will have a list of data points attached. These could be simple decimal numbers, but will more likely be dictionaries containing the measured value, channel status, and a time stamp. Serialization then yields a complete program along with the data measured by each node.
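As a rough sketch of the layout just described, the following builds a loop node with three measurement nodes and serializes it. The key names (`type`, `count`, `interval_s`, `channel`, `list`, `value`, `status`, `time`) and the sample data point are assumptions made for illustration only; they are not the schema or the data used on the target.

```python
import json

# A loop node owning three measurement nodes. Every node is composite:
# each one carries a list, which is still empty before any measurement.
loop = {
    "type": "loop",
    "count": 10,          # repeat ten times
    "interval_s": 60,     # seconds between iterations
    "list": [
        {"type": "measure", "channel": 1, "list": []},
        {"type": "measure", "channel": 2, "list": []},
        {"type": "measure", "channel": 3, "list": []},
    ],
}

before = json.dumps(loop, indent=2)

# After a measurement, a data point is appended to the node that took it.
# The values below are placeholders, not real measured data.
loop["list"][0]["list"].append(
    {"value": 1.25, "status": "ok", "time": "T+0s"}
)

after = json.dumps(loop, indent=2)
```

Serializing before and after a run gives two texts that can be compared directly: the program part is identical, and the difference is exactly the data attached to each measurement node.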

If you are new to this page, you'll want to start with the topic What is a "Measurement Appliance"? below. If you've visited before, welcome back and let me share with you some results I've obtained recently. First of all, it works. The basic concept of a measuring instrument which exposes a file system interface works beautifully, without drivers or installation of any kind, and across all platforms. What's so surprising about this is not how well it works, but how easy it was! Am I still the only one working on this? Come on, guys, we've got work to do. Here's what I've accomplished so far.

I've been working recently with a Toradex Colibri-based measuring instrument which exposes a file system interface through both USB and Ethernet. The Colibri module has the ability, along with many other embedded targets, to run both USB client and host drivers at the same time, through two different USB ports. But now that I've had a chance to play with it, there are some limitations that rule out some of the ideas in my original manifesto given below. It turns out that local volumes exposed via USB to an upstream host computer aren't actually mounted locally at the same time. The reason for this is that the interface is implemented as a simple pass-through, with the host computer managing block operations through SCSI mass storage commands. So there is no way for the target to communicate changes in the directory to the host, or to even know what's in the directory after the host has made changes! That rules out using a USB file system for real-time measurement and control. It may still be possible however to use the file system in non-real-time applications (data loggers, for instance) by signaling that the device has been "ejected" so the host will dismount it. The target can then mount the volume locally, obtain the directory, and process any commands ready for input or output. After the target is done, it could then dismount the volume locally and signal the host that the device has been "inserted" again. This seems to be what digital cameras and voice recorders are doing with their mass storage.

A much more promising route, at least with the Colibri processor, is to use Ethernet to connect the target to one or more host PCs. A simple batch file (WinCE has a command shell processor, much like a Windows DOS box) can run in the background and watch the name of a zero-length file in a shared directory. This file name represents the state of a simple state machine, where each actor knows which states it can handle, and then changes the file name to indicate that it will take the next step. This operation is guaranteed atomic by the network file system, so there will never be a tie between two actors. Note that while it's convenient to have the shared directory resident on the target, it can actually be anywhere (on the ground, or in the cloud) that authenticated actors can access it. Obviously, there can be any number of actors and observers, evaluating and responding to the system state as it evolves. Here are the details of a simple demo I've been showing to my colleagues.

The Colibri has a directory called "\Temp" in which I've placed a zero-length file named "idle". VBA (Visual Basic for Applications) code running in an Excel spreadsheet on the host PC changes the name of this file to "run", in response to the user clicking the mouse on a button in the spreadsheet. I've previously mounted the directory over the network and assigned it a Windows drive letter. When the target sees the name change to "run", it makes a series of measurements and writes the results to the same directory as comma-separated columns in a text file. After the output files have been written and closed, the target then renames the "run" file back to "idle", which is seen by the host PC. The VBA code, which was waiting for this change, loads the results into the active worksheet and displays them in a chart. This is all done without special drivers, without external code, and with no proprietary interface cards or dongles. It just works because all it's using is the file system, which was there all along.
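The handshake in this demo can be sketched in Python, with a temporary directory standing in for the shared "\Temp" directory. The function names `try_claim` and `target_step`, the output file name `results.csv`, and the sample values are hypothetical; the real demo uses VBA on the host and a batch file on the target.

```python
import csv
import os
import tempfile


def try_claim(shared_dir, old, new):
    """Rename the state file from old to new.

    Returns True if this actor made the change, False if the file was not
    in the expected state (for example, another actor renamed it first).
    """
    try:
        os.rename(os.path.join(shared_dir, old), os.path.join(shared_dir, new))
        return True
    except OSError:
        return False


def target_step(shared_dir, measure):
    """Target side: if the state is "run", measure, write CSV, go idle."""
    if not os.path.exists(os.path.join(shared_dir, "run")):
        return False
    with open(os.path.join(shared_dir, "results.csv"), "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["channel", "value"])
        for channel, value in measure():
            writer.writerow([channel, value])
    # Rename only after the output file has been written and closed.
    return try_claim(shared_dir, "run", "idle")


# Set up the shared directory with a zero-length file named "idle".
shared = tempfile.mkdtemp()
open(os.path.join(shared, "idle"), "w").close()

# Host side: the button click renames "idle" to "run".
host_started = try_claim(shared, "idle", "run")

# Target side: sees "run", measures (placeholder values), renames to "idle".
target_done = target_step(shared, lambda: [(1, 0.5), (2, 1.5)])
```

A second actor that tries the same rename after the first has succeeded gets `False` back, because the file no longer has the old name. That is the property the state machine relies on.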

Almost everyone I've demo'd this for has understood the concept immediately, and is now planning to incorporate it into their own work. I've had several complaints however about the potential for security risks. For instance, a bad guy with a packet sniffer and physical access to your network could watch your traffic and see all your measurement data. Or a seriously bad guy could hack your network and send spurious commands to your instrument causing all kinds of havoc. While these are important risks to keep in mind, I'm afraid that train has already left the station. It should be obvious that your network must be properly secured before you start connecting measuring instruments to it. And don't forget to set a unique network password for each instrument.

One colleague has cautioned me about a risk I hadn't considered. By making programming for measuring instruments so easy, I'm actually lowering the bar for the level of skill and training required to get useful results. So a novice could easily get in over their head and make all kinds of mistakes, with potentially disastrous consequences. Here too, I think I see a train that is far from the station. Novices have been making mistakes while writing test and measurement software for many years, so I don't think the measurement appliance concept will make their mistakes any worse. Although I do have to admit that, just as the measurement appliance will make skilled practitioners more productive, it will make novices more productive as well. What do you think? All comments and criticisms are welcome, at the email address in the graphic at the bottom of this page.

A "Measurement Appliance" is simply a measuring instrument which exposes a file system interface. This is my definition, which I'm using to promote a new paradigm for data acquisition, measurement, and control. The concept is quite simple, based on the current generation of handheld devices which expose a file system interface via USB (Universal Serial Bus). USB itself is a flexible and robust interface which allows portable devices to be mounted as volumes on computers running a wide variety of operating systems. It should be obvious however that you don't have to use USB in order to expose a file system interface. Other protocols like ftp (file transfer protocol) have been around a lot longer, but for some reason have not yet been implemented in measuring instruments. Well, why not? Automated test and measurement has been with us since well before 1977, when Hewlett-Packard introduced their microprocessor-based model 3455A DVM (digital volt meter) [Gookin, 1977]. The HP-3455A was interfaced via what was then called HPIB (Hewlett-Packard Interface Bus), and would soon morph into GPIB (General-Purpose Interface Bus). Surely 30+ years should have been long enough for instrument vendors to figure out how to do a file system. What could possibly be holding them back? New USB devices like digital cameras, camcorders, voice recorders, smart phones, and so on often expose a file interface. Just plug them into any computer and a new volume is mounted, often with a hierarchical directory structure containing media files for sounds, pictures, video, and sometimes even containing the user documentation for the device in the form of a PDF file! This typically happens without downloading any drivers or proprietary software. That's because modern computer operating systems are built around the file system, and already know how to handle it smoothly.

Imagine a measuring instrument that lets you read and write all of its settings via a simple text file. Copy the text file to your hard drive to capture a snapshot of the settings, then copy another file back to the device to restore settings to a previous state. Edit the text file to modify the settings. Read back a text file to see a list of errors. Or read its configuration and calibration history in XML (eXtensible Markup Language) format. Print a hard copy using any XML viewer. Or trigger a measurement cycle by touching a particular file, Unix-speak for updating the access and modification times for a file. Open a measured data file in CSV (comma-separated values) format directly in Excel or other spreadsheet program. Type a list of measurements to be done at timed intervals, save it to the device, then disconnect from the computer. Come back a week/month/year later and read the logged results by copying the data file from the device. Notice that I've just described all the things you would normally do with a data acquisition device, with no mention of any proprietary drivers or application software. That's because none are needed! So you are free to choose the programs you like the best for handling text files, tabular data, and nested structures like XML. It goes without saying that all common operating systems are immediately supported as long as they can mount your device as a volume. And just as with any flash drive, you can move your device from one operating system to another with no particular difficulty.
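A few of these operations can be sketched with nothing but standard file calls. Here a temporary directory stands in for the mounted device volume, and the file names `settings.txt`, `snapshot.txt`, and `trigger`, along with the `key=value` settings format, are assumptions chosen for illustration.

```python
import shutil
import tempfile
from pathlib import Path

device = Path(tempfile.mkdtemp())   # stands in for the mounted instrument
host = Path(tempfile.mkdtemp())     # stands in for a folder on the hard drive

# Pretend the instrument exposes its settings as a simple text file.
(device / "settings.txt").write_text("range=10V\nrate=5\n")


def read_settings(path):
    """Parse key=value lines into a dictionary."""
    settings = {}
    for line in path.read_text().splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            settings[key.strip()] = value.strip()
    return settings


def write_settings(path, settings):
    path.write_text("".join(f"{k}={v}\n" for k, v in settings.items()))


# Capture a snapshot of the settings by copying the file to the host.
shutil.copy(device / "settings.txt", host / "snapshot.txt")

# Modify a setting by editing the text file on the device.
current = read_settings(device / "settings.txt")
current["rate"] = "20"
write_settings(device / "settings.txt", current)
edited = read_settings(device / "settings.txt")

# Restore the previous state by copying the snapshot back.
shutil.copy(host / "snapshot.txt", device / "settings.txt")
restored = read_settings(device / "settings.txt")

# Trigger a measurement cycle by touching a particular file.
(device / "trigger").touch()
```

Nothing here is specific to a measuring instrument, which is the point: any language with file system calls could do the same.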

If your device would benefit from a specialized application program, you can write your program in any language that supports standard file system calls. So you want to write in Java? VB, VBA, or VBScript? Perl, C, or C++? Fortran, Lisp, or even LabView? DOS batch files!? Yes, you can use any or all of them by making standard file system calls to the volume where your device is mounted, the "drive letter" in Windows-speak. The code running on the host computer can be compiled, interpreted, or assembled according to your preference. It can interact with as many measurement devices as your computer can mount as volumes. Your code can even interact with the larger world through communications or via the internet. If you choose to do so, your measurement appliances can be shared over a LAN (local area network) or WAN (wide area network) by the host computer, using all of the security and authentication protocols that are built into modern operating systems. This functionality is free, or at least already paid for. Now what about the code running on the measurement appliance? This is where things start to get interesting. The instrument vendor will have their own cross-development environment appropriate for the chosen target, with a code repository, version control, defect tracking, and all the niceties. But customers will also want to be able to write code to run on the target. A vendor could choose to make available an API (application programming interface) that allows direct access to the measurement hardware for code running on the target. But they could also choose to keep the hardware private, and instead require programs to interact with hardware through the file system, just as the user would do if setting things up manually. In this way, the user has a simple and direct path from manual setup to programmed operation. Let me stress this last point by reiterating it in a list of steps a user could follow to develop a stand-alone application:

Consider for a moment all the different programming environments you've used to write code for embedded processors. Now picture if you will an interpreter, compiler, or assembler that runs on the target. You type your source text into any editor running on the host computer, then save it on the target to be interpreted, compiled, assembled, or whatever. Read back a text file from the target containing any errors encountered. After you empty the error list, your object code (or intermediate code if using an interpreter) is now resident on the target, ready for execution. And you didn't have to install any development tools on the host. But what about debugging, you might ask? I'm not proposing running a shell or command interpreter on the target, nor do I envision an IDE (integrated development environment) on the host, so debugging will of necessity be rather primitive. Now think about the best code you've ever written, that had zero defects and went forever before needing revisions. I'm guessing that it was written with only primitive debugging tools, like printf or println, or even an oscilloscope and some blinking LEDs. I once wrote and debugged some 68k assembly code with nothing more than an audio beeper to find out what was happening, and that code ran for its entire life cycle with no changes whatsoever! I'm not trying to brag here, just pointing out that simple, even primitive debugging tools are all you need to do your very best work. The beauty of having a file system resident on the target is that you can write all kinds of debugging code that saves messages in a text file, and costs almost nothing in terms of execution speed or communications bandwidth. What happens if your code crashes the target? Just do a soft or hard reset as needed to restore communications, and read back the debug file your application wrote to see what happened. Of course, this is all a pipe dream as of this writing (May 2010). 
Come back in a year or so to find out how the real thing works.

Files on the measurement appliance may be real or virtual, but what does that mean? Real files are those for which the data to be read or written exists in literal form on the device. A text file, for instance, would be contained in a linear sequence of bytes in memory, one byte per text character. To interact with a real file, the device looks up the start address and length, and reads or writes the bytes one at a time. Virtual files on the other hand are those for which the data to be read or written is encoded or distributed on the device in such a way that it must be decoded or agglomerated before presenting it to the host. An obvious example would be a data log file, which might appear in memory on the device in a proprietary binary format to save space, but would be expanded to human-readable text when presented to the host.
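The data log example can be sketched as follows, assuming a hypothetical 10-byte binary record (a 32-bit time in seconds, a 16-bit channel number, and a 32-bit float value). The record layout and the function names are illustrative and do not come from any real instrument.

```python
import struct

# Hypothetical compact record: uint32 time, uint16 channel, float32 value.
# The "<" prefix means little-endian with no padding, so 10 bytes each.
RECORD = struct.Struct("<IHf")


def append_record(log, time_s, channel, value):
    """Device side: store one measurement in the compact binary log."""
    log.extend(RECORD.pack(time_s, channel, value))


def read_virtual_file(log):
    """Expand the binary log to human-readable CSV text on demand."""
    lines = ["time_s,channel,value"]
    for time_s, channel, value in RECORD.iter_unpack(bytes(log)):
        lines.append(f"{time_s},{channel},{value:.3f}")
    return "\n".join(lines) + "\n"


log = bytearray()
append_record(log, 0, 1, 1.25)
append_record(log, 60, 1, 1.5)

# What the host would see when it reads the virtual file.
text = read_virtual_file(log)
```

The text never exists in the device's memory until the host asks for it. Only the 10-byte records are stored, and the expansion happens at read time.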

I suspect that instrument vendors feel as if they are bound in chains to their installed base of legacy users and applications. Programmed and manual control by way of a file system interface is just too different from what they've been doing. They are afraid of making it too easy for their users (that's us!). Clearly, introduction of file-based instruments would soon make all existing models obsolete, gutting their sales and leaving today's experts scrambling. This is what business school types call a "disruptive technology" [Bower, 1995], one that would be certain to get a low score in any focus group made up of existing large users of test and measurement equipment. Simply put, I think that Agilent, Danaher, Fluke, Keithley, Lakeshore, National Instruments, Stanford Research, Tektronix, and on and on are all waiting for someone else to go first. They are waiting for you and me to step up and show them the way. That is the purpose of this web site and the mission of this project, to demonstrate what can be accomplished and what it takes to get there. Existing vendors can pursue this if they choose to. Or new vendors might spring up to take their place.

Some vendors have started to think about new ways to use USB-connected devices, but haven't yet realized that they can expose a file system interface. Measurement Computing, for instance, now offers their DAQFlex Open-Source Software Framework which exposes a simple message-based programming interface on a variety of operating system platforms. As long as you program to their API and they continue to maintain drivers for your platform, your message-based data acquisition and control code should continue to run. And Agilent has won the 2009 Design News Golden Mousetrap Best Product Award for a line of modular USB-connected instruments that implement the message-based USBTMC-USB488 standard, compatible with Microsoft Windows operating systems only. Agilent would like to think that this represents the future of data acquisition, but the fact is that all message-based interfaces actually represent the past. Big firms like Agilent pin their hopes on standards-based computing [Solomon, 2008] while forgetting that successful standards evolve out of disruptive technologies that worked.

It should be obvious that changing the direction of a $1B global industry can't be done overnight. But a journey of many miles begins with a single step [Lao Tzu] so let's get started. Here are some of the things I'll be doing to get this project off the ground, in more-or-less the order presented:

It depends. In my project documented here I'll be following an evolutionary path, to allow a step-by-step process which begins where I am today and winds up where I want to be. But one could also make a clean break with the past and start with the end goal, making a revolutionary change all at once in a single step.

I hope so! These ideas are so obvious that there should be many individuals and groups around the world all working on this at the same time. Eventually, one or more of them will get noticed and standards will start to emerge. The best case is where all devices and vendors converge on a universal scheme which allows easy integration of systems through interoperability, and easy enhancement through adding of new features without breaking old ones.

The Teletype ASR-33 and KSR-33 were the prototypes for connecting to measuring instruments, supporting a 72 character command line. Then the Lear Siegler ADM-3A video terminal helped a little by expanding the command line to 80 characters. Some other terminals allowed only 64 characters on a line, a nice round number in binary math but barely enough for simple measurements. The Apple II and BBC Micro were among the early automation controllers. But all were based on a command line structure, with queries and replies. Your command was typed on a single line, sometimes allowing the backspace or delete key, sometimes not! When you hit ENTER the command was parsed and executed, unless an error occurred. A single line reply would then be sent to the terminal, confirming successful completion of the command, returning a measured value as text, or possibly returning an error message. This simple call-and-answer protocol is the basis of almost all IEEE-488 and RS-232 communications. Believe it or not, most modern measuring instruments are still bound by this legacy interface. Some new internet-capable instruments have even carried the command line interface forward by making it available via telnet.

Computers of all types have standardized universally on their file systems as the interface of choice for most tasks. On essentially all computers, the file system interface is implemented through system calls which let you, among other things, open and close files, read and write them, set and get their attributes, and traverse (that is, list) a directory or directory tree. The file system is robust and flexible, and will not (cannot) be changed on a whim that would make your device obsolete. Many hardware interfaces have come and gone over the years, but the file system is here to stay.

"Measurement servers are defined as a class of solution that combines physical measurements with client/server computing. Real-world measurement data is captured, analyzed and shared with users and other tools over the network using a variety of open-systems standards." [Peters, 1996]

This definition from Hewlett-Packard reveals that the key concept here is client/server computing. In other words, it's a database, not a file system. This strategy can be useful and effective in enterprise management systems where one might be interested in, for instance, shop floor data collection, or order entry and fulfillment. In some cases it is possible to adapt client/server technology for use in an R&D (research and development) lab, but that's not what I'm proposing here. "Measurement Appliance" is all about the instrument, and does not try to constrain what you do with your measurement data once you have it. One could certainly connect any number of measurement appliances to a measurement server, so that clients could interact with them using common database commands. For an interesting contrast, National Instruments defines a measurement server as

"a networked computer that is capable of managing large channel counts and performing advanced datalogging and supervisory control. You can also use them to warehouse your data and turn your measurements into the information you need to run your operation efficiently." [Chesney, 2000]

In spite of this clueless definition, it seems that National is enjoying some success in the field with their networked solutions.

I'm a coauthor on a suite of four patents which kicked off the move to USB for measurement and control devices. These patents have now been cited more than 1000 times, often by Fortune 500 companies. But they were written in the early to mid 1990s, before we knew how to put a file system on a simple embedded device. The landscape today is very different and I believe it's time to get started now, so we can enjoy the benefits of file system based embedded applications before it's time to retire! I'm expecting no support at all from established instrument vendors, but am hopeful that new ventures will see this as a way to differentiate their products, while pleasing and exciting customers at the same time.

Of course. A trend has begun recently to make computers more aware of their surroundings by adding sensors for such things as weather (temperature, barometer, humidity), location (GPS, global positioning system), and orientation (3-axis gyroscope and accelerometers). Additional sensors such as an electronic nose for monitoring air quality may soon be added to the list. One can imagine defining a unique API tailored for each sensor, but wouldn't it be nice if they could all be accessed through a common file system interface instead? So you could install a variety of sensors and, without worrying about drivers or proprietary application programs, scan the volume list manually or in a program to see and interact with whatever is there. It's something to consider.

Most of the work cited here is, of necessity, from the related field of measurement servers, since the field of measurement appliances doesn't exist yet. If you have published work related to measurement appliances, please send an email to the address in the graphic at the bottom of this page, so I can cite it here.

I am placing the concepts and ideas presented on this page in the public domain, for the benefit of scientists and engineers. Please remember that only you can determine if these ideas are suitable for your application. Feel free to post a link to this page on your site, and if your site is relevant I'd be happy to post your link here. Comments and contributions to this work are always welcome. You can send email to the address in the graphic at the bottom of this page. I do not claim any trademark interest in the words "Measurement Appliance", and believe they should apply generically to all devices of the type described here.